Exploring Segment Anything Model 2 (SAM 2) with Gradio

Introduction

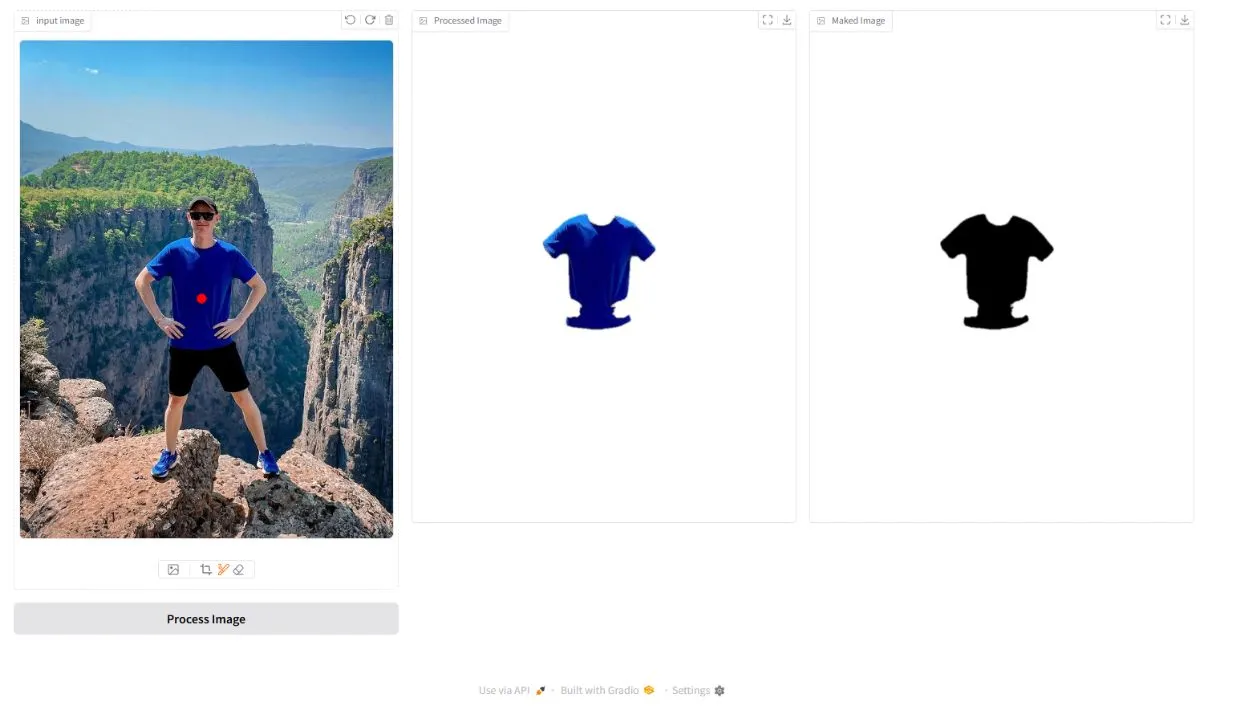

I recently explored Segment Anything Model 2 (SAM 2), developed by Meta, and was impressed by how well it segments objects from very little input. The model takes an image as a NumPy array, together with the coordinates of points on the object of interest, and produces a segmentation mask. Getting those coordinates from users, however, can be a challenge.

To streamline this process, I integrated Gradio, a Python library for building web interfaces around machine learning models, which makes the model more accessible to end users.

Extracting Coordinates from Images

One of the key challenges was extracting the desired coordinates efficiently. Instead of collecting them by hand, I read the image layers as NumPy arrays and applied color filters to find clusters of pixels that look like red dots. Each cluster gave me a coordinate point taken directly from the image.
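The filtering step can be sketched roughly as follows. This is a minimal illustration, not the code from the original project: it assumes the dots are close to pure red and that the layer is an RGB or RGBA array of shape (height, width, channels). The function name `find_red_dot_centers` and the threshold values are hypothetical, and the grouping uses a plain 4-connected flood fill, which is only one of several ways to cluster the matching pixels.

```python
from collections import deque

import numpy as np


def find_red_dot_centers(image, min_red=200, max_other=80, min_pixels=4):
    """Return an (N, 2) array of (x, y) centers of red pixel clusters.

    The thresholds are illustrative assumptions, not values from the post.
    """
    rgb = image[..., :3].astype(np.int32)  # drop alpha if present
    r, g, b = rgb[..., 0], rgb[..., 1], rgb[..., 2]
    # A pixel counts as "red" when red is strong and green/blue are weak.
    red = (r >= min_red) & (g <= max_other) & (b <= max_other)

    height, width = red.shape
    visited = np.zeros((height, width), dtype=bool)
    centers = []

    for y0, x0 in zip(*np.nonzero(red)):
        if visited[y0, x0]:
            continue
        # Flood fill: collect every red pixel connected to this seed.
        queue = deque([(y0, x0)])
        visited[y0, x0] = True
        pixels = []
        while queue:
            y, x = queue.popleft()
            pixels.append((y, x))
            for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                ny, nx = y + dy, x + dx
                if (0 <= ny < height and 0 <= nx < width
                        and red[ny, nx] and not visited[ny, nx]):
                    visited[ny, nx] = True
                    queue.append((ny, nx))
        # Ignore tiny clusters, which are more likely noise than a dot.
        if len(pixels) >= min_pixels:
            ys, xs = zip(*pixels)
            centers.append((float(np.mean(xs)), float(np.mean(ys))))

    return np.array(centers, dtype=np.float32).reshape(-1, 2)
```

Each row of the result is one dot in (x, y) pixel order, which is the layout SAM 2 point prompts use. The thresholds would likely need tuning for anti-aliased or semi-transparent brush strokes.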

Isolating Regions Using the Output Mask

Once the coordinates were identified, I passed them to SAM 2 and used the output mask it generated to isolate the selected regions from the original image. The result is a masked version of the image in which only the selected regions remain visible.
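A rough sketch of these two steps is shown below. The post does not show the original calls, so the details are assumptions: `predictor` is taken to be an already loaded `SAM2ImagePredictor` from the `sam2` package, every point is treated as a foreground point, and the helper names `best_mask` and `apply_mask` are hypothetical.

```python
import numpy as np


def best_mask(predictor, image, points):
    """Query a SAM 2 image predictor and keep the highest-scoring mask.

    `predictor` is assumed to expose set_image() and predict() the way
    SAM2ImagePredictor does; `points` holds (x, y) pixel coordinates.
    """
    points = np.asarray(points, dtype=np.float32).reshape(-1, 2)
    labels = np.ones(len(points), dtype=np.int32)  # 1 = foreground point
    predictor.set_image(image)
    masks, scores, _ = predictor.predict(
        point_coords=points,
        point_labels=labels,
        multimask_output=True,
    )
    # Several candidate masks come back; pick the one with the top score.
    return np.asarray(masks)[int(np.argmax(scores))] > 0


def apply_mask(image, mask, background=0):
    """Keep the pixels where mask is True and fill the rest with background."""
    mask = np.asarray(mask, dtype=bool)
    if mask.shape != image.shape[:2]:
        raise ValueError("mask and image must share height and width")
    result = np.full_like(image, background)
    result[mask] = image[mask]
    return result
```

Filling the background with a constant is only one option; blending the mask as a colored overlay would highlight the region while keeping the rest of the image visible.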

Benefits of This Approach

- Automated Coordinate Extraction – Coordinates are read from the image itself, so nobody has to enter them by hand.

- Efficient Image Segmentation – SAM 2 handles the segmentation and produces a precise mask from only a few points.

- Seamless Deployment with Gradio – Provides an easy-to-use web interface for users to interact with the model.

- Flexible Application – Can be extended to various segmentation tasks, from medical imaging to object detection.

Conclusion

By integrating SAM 2 with Gradio and using NumPy-based processing, I streamlined both steps: extracting the point coordinates and generating precise masks. The approach shows how a deep learning model like SAM 2 can be made practical for real-world use, with segmentation that is both efficient and user-friendly.

Hashtags

#SAM2 #DeepLearning #Gradio #AI #NumPy